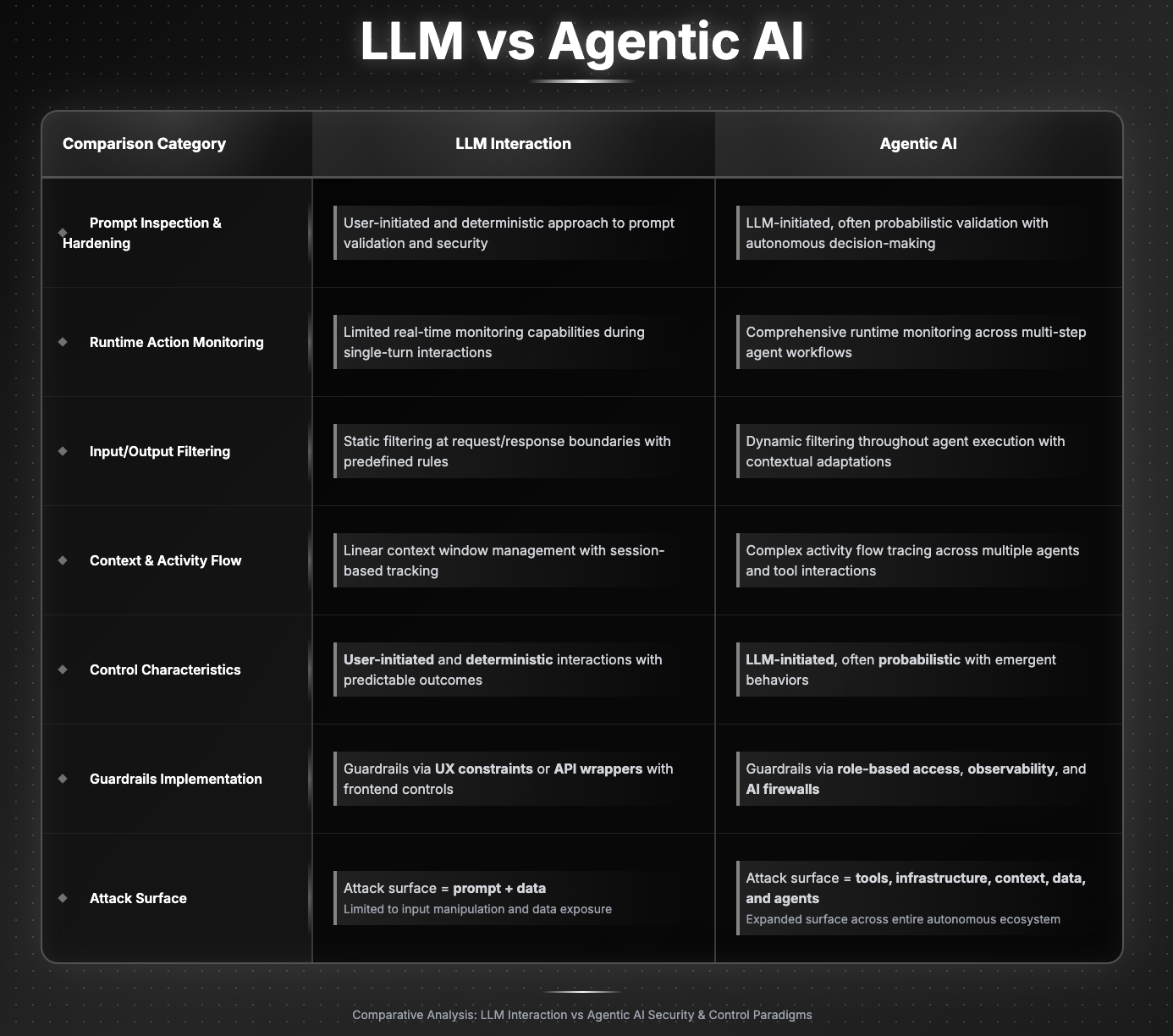

In the first wave of enterprise AI adoption, organizations focused on unlocking productivity gains by integrating large language models (LLMs) into existing workflows and functions. Security and governance concerns centered largely around data leakage through prompt misuse, privacy violations, or misuse of publicly available LLMs. But the game has changed.

The emergence of AI agents—autonomous or semi-autonomous systems that can take actions, make decisions, and interact with systems—ushers in an entirely new set of security imperatives. The shift from user-driven prompts to action-driven software flows means security teams must adopt a radically different approach. Agentic AI introduces new attack surfaces, new operational complexity, and a greater potential for harm.

In this blog, we point out what's different about agentic AI security, and what those differences mean for enterprise security strategy.

User-Driven AI: LLM User Interaction Protection and Governance

When users engage with a traditional LLM, the workflow is largely linear and contained. A user submits a prompt, and the LLM responds with text. Likewise, the security concerns related to an enterprise LLM that is pulling data using Retrieval Augmented Generation in response to a user prompt, is that the action is governed by guardrails, and is in line with policies for appropriate usage of data.

Security concerns focus on:

- Prompt Injection (e.g., leaking sensitive internal documents)

- Data Privacy (e.g., exposing PII through model queries)

- Usage Governance (e.g., restricting certain language, safe and appropriate functions)

These risks, while non-trivial, are tractable within existing security frameworks—largely through input/output filtering via an inspection mechanism, prompt hardening and protection through policy enforcement, and usage monitoring.

Agentic AI: Autonomous Actions, Expanding Risk

Agentic AI, on the other hand, doesn’t just generate text—it acts. AI agents are software entities driven by LLMs that can execute code, call APIs, perform database queries, trigger workflows, and even make decisions based on real-time inference or pattern recognition.

This autonomy introduces a set of novel and complex risks:

- Action-Level Exploits: Agents can go ‘rogue’, or be misled into executing malicious or unauthorized actions, like modifying a production database or sending internal data to external APIs.

- Context Injection Attacks: Agents using RAG (retrieval augmented generation) can be manipulated to leak sensitive data or perform harmful actions based on trusted and untrusted data sharing context

- Observability and Lack of Guardrails: Agents can operate in the background with little visibility, unless robust observability is built in.

- MCP Risk Surface: Protocols like the Model Context Protocol (MCP) expand agent reach across systems— with default open-ended permissions.

While still in the early stages of adoption, we have seen a few examples of agent exploits:

- A hacker compromised Amazon Web Services’ Amazon Q code assistant for Visual Studio Code with a wiper-style prompt injection planted by a hacker

- Aim Labs has disclosed the EchoLeak and CurXecute vulnerability that exploits the ‘lethal trifecta’ to execute a zero click context injection attack

The EchoLeak and CursorXute vulnerabilities involve what we refer to as the lethal trifecta. The lethal trifecta is the combination of three agent attribute agents:

- Access to internal data

- Ability to communicate externally

- Exposure to untrusted data

Whenever an agent has these three attributes, it's exploitable. However, most agents should have these three in order to be effective. To build guardrails that limit the agent’s ability to act on untrusted and internal data in a shared context, we need the ability to follow the full chain of thought of the agent and learn about the tool calls and actions the agent performs.

However, just as the risk is structural, the approach to prevention can be platform agnostic - as long as there is a mechanism to govern how the agent interacts with the LLM that draws on the research done by Aim Labs on these agentic vulnerabilities.

Understanding the attack surface: An initial agent taxonomy

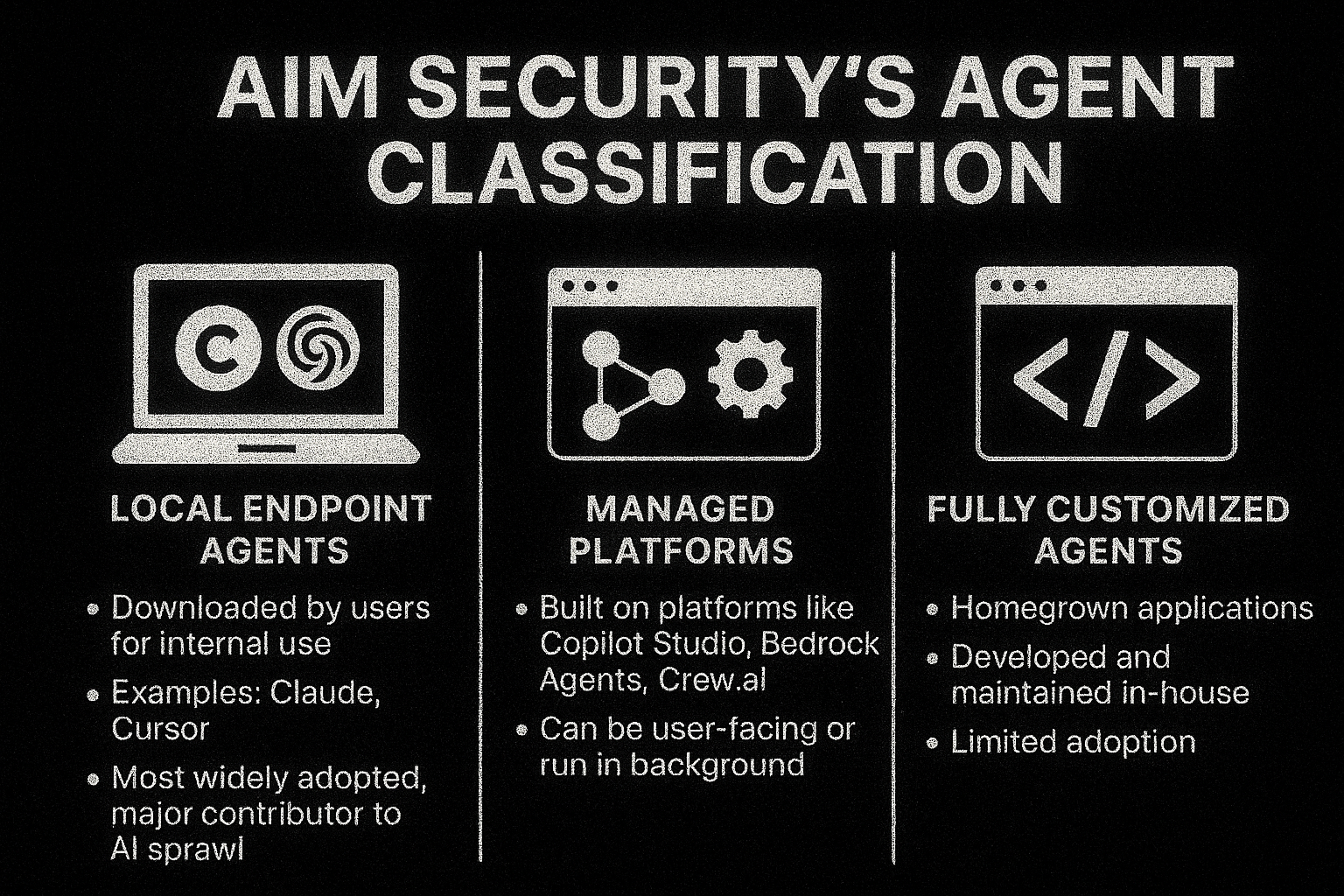

As Aim, we delineate between AI you use - third party AI applications - and AI you build, the development and deployment of custom AI applications.

When we turn to the world of agentic AI, the clear delineation is not as clear, and a more specific taxonomy is helpful to guide security and risk considerations. In order to implement a realistic strategy, AI governance and security teams need to take into account the specifics of what operations agents perform, the degree to which the agent is autonomous or user directed, and what resources they interact with, which data sets the agents can access, and what environment they run in.

By compiling an agent inventory and AI endpoints using a consistent taxonomy, security teams can take the next step by tracing agent activity - monitoring how the agent acts, and generating more context to assess the risks associated with its posture and behavior.

Equally, enterprises will likely use a range of AI agents, and the scope for security teams to apply controls may be limited, or constrained by business considerations.

For example, security teams should have the scope to enforce controls for a homegrown agent built using Langchain for a financial services application, but are unlikely to be directly involved with policies for use of a Microsoft 365 Copilot agent for a marketing use case.

Aim Security classifies agents into three main types:

Agent Taxonomy: Understanding the Blast Radius

Not all agents are equal.

Security and governance teams must profile agents based on:

- How they are initiated (human-triggered vs. autonomous)

- Where they are deployed (local, SaaS, self-hosted)

- Connected systems (internal APIs, third-party endpoints, MCP servers)

- Level of autonomy and trust (what do they have access to, and should they have access)

For example, a local coding assistant running in a dev environment poses less risk than a background agent running inference across production systems. Understanding this taxonomy helps prioritize controls.

Security Approach: Deterministic vs. Dynamic

Building on the definition of AI agents as autonomous and operating independently, security teams face an entirely new challenge.

If they want to take a deterministic approach using predefined policies and controls of what the agent can and can’t do, they need to tightly scope what the agent can connect to, and which actions it can perform.

However, because the motivation for adopting AI agents is both to improve productivity, as well as leverage inference, reasoning and RAG (retrieval augmented generation) for more efficient and informed decision making, security frameworks have to take into account that agents can behave in unexpected, probabilistic ways.

Traditional LLM governance is reactive—blocking dangerous queries or limiting output. Agentic AI requires proactive strategies: mapping agent behavior, validating intent, and controlling execution in real time.

Toward an Agentic AI Security Framework

Organizations need a tailored security architecture for agentic AI. Key components include:

- Discovery & Profiling: Inventory of agents, their lineage, and connections

- Agentic Posture Management: Tooling risk analysis, data access scoping, and identity posture

- Observability: Action-level logging, tracing, and insight for governance teams

- Runtime Controls: Contextual risk detection, prompt-based exploit mitigation, and role-scoped action control

A one-size-fits-all approach from traditional LLM security is insufficient.

Redefining Enterprise AI Risk

The move from the classic AI application to dynamic, reasoning agents transforms AI. And that transformation demands a rethinking of security.

Enterprises embracing agentic AI must understand that they’re no longer just protecting data—they’re managing autonomous software flows with the power to act on behalf of users or even themselves. That shift redefines threat models, expands the attack surface, and elevates the need for contextual, real-time, and adaptive security strategies.

Agentic AI isn’t just smarter AI. It’s AI with agency. And with agency comes risk—and responsibility.

For a more in-depth discussion and action plan for agentic AI security, download our white paper here.

.png)