Artificial intelligence is reshaping how enterprises use technology, at a pace and breadth that is unprecedented. The pace and breadth of adoption create new attack surfaces, introduce risks such as prompt injection and data leakage (especially through agentic AI), and often result in governance blind spots. While the pace of adoption creates urgency, its breadth poses a more fundamental organizational challenge to the role of security teams.

Securing and governing AI is no longer a niche specialization; it’s a cross-functional mission that touches data, applications, infrastructure, compliance, and the business itself. The question isn’t just how to secure AI, but how to build secure pathways to adoption, and risk mitigation strategies, that are sustainable and collaborative.

With the right strategy, tools, collaboration, and participation in forums such as AI governance committees, security leaders can lead the AI transformation through secure enablement and governance enforcement. When these elements are aligned, security shifts from a defensive discipline to a strategic enabler of AI-driven growth.

In this new context, the structure, the composition, and the skill sets of the security organization have become a critical consideration in many enterprises’ overall AI adoption strategy.

Building the Right Leadership Structure for AI Security

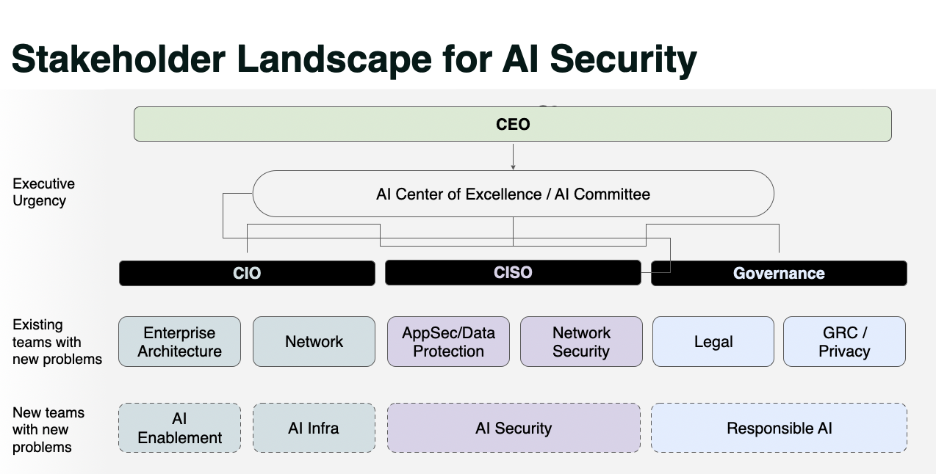

Many CISOs have lived through significant technology transitions, whether digital transformation, which reshaped the role of data security, or the widespread adoption of DevOps and cloud services, which reshaped application and infrastructure security. These transitions can serve as a frame of reference for responding to the adoption of AI. However, because AI is pervasive (creating new problems for existing security teams and giving rise to new teams with entirely new security demands), CISOs need to formulate a more comprehensive strategy that reinforces their relevance.

The most effective approach we have seen is to appoint an AI Security Lead who is responsible for AI within the security organization and who also has the skill set and breadth of domain expertise to act as a security advocate and partner for every function AI affects, from compliance and legal to engineering, data science, and infrastructure.

The pattern we have observed at large enterprises is that a deputy CISO often steps into this new role. Alternatively, the AI Security Lead can be drawn from roles such as Data Security Lead, Cybersecurity Architect, AppSec Lead, Information Security Manager, or Principal Security Engineer.

While their background is important, the most effective AI security leaders are the ones who recognize that their influence depends less on command and control, and more on connectivity and coordination.

Equally, the AI Security Lead must help existing security teams adapt effectively, with a clear set of objectives aligned with the urgency and scope of the enterprise’s overall AI adoption strategy. To remain relevant, security teams must be able to define and deliver policies and pathways to identify, mitigate, and address risk from evolving AI adoption, with agentic AI only the latest example.

Embedding this leadership within existing security structures, while maintaining strong ties to enterprise architecture and to the AI governance committee or AI Center of Excellence, ensures that security scales with innovation rather than lagging behind it.

The AI Security Leader’s Role

The role of the AI Security Lead is emerging as a critical junction point in this new organizational structure.

This individual doesn’t replace the CISO; they extend the CISO’s reach into the rapidly evolving intersection of security, data, engineering, data science, and innovation.

The most effective leaders in this space balance strategic fluency (understanding regulatory and business implications) with technical credibility (command of AI models, pipelines, and threat landscapes). They translate governance principles into engineering practices and, just as importantly, translate technical risk into board-level dialogue.

Collaboration is key: the AI Security Lead must bridge GRC, Legal, Cloud Security, and Data teams to ensure that policies are clearly defined and consistently enforced – with consolidated auditing and reporting.

The AI Security Lead embodies the new reality that AI security is not purely technical — it’s organizational.

The AI Security Leader: New Role, New Responsibility

The evolution of AI demands a dedicated AI Security Lead — not just a technical expert, but a bridge-builder across domains.

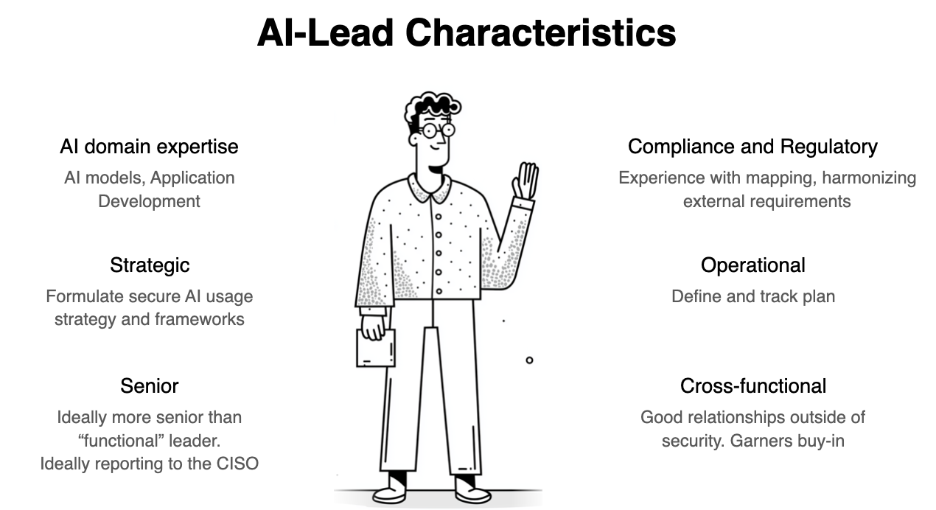

Based on our observations and feedback from organizations adopting AI at scale, this leader should be:

- Senior: Reporting to the CISO or higher, ensuring authority to set direction across silos.

- Strategic: Responsible for formulating the organization’s AI usage strategy, aligning it with enterprise risk and innovation goals.

- Operational: Capable of driving execution through plans, frameworks, and measurable objectives for teams within the security organization.

- Cross-Functional: Skilled in building trust and collaboration across data science, legal, compliance, and IT.

- Regulatory-Fluent: Equipped to map global and emerging AI regulations and frameworks (such as the EU AI Act and the NIST AI RMF) to internal policies.

This leader becomes the nucleus of the AI security function — setting standards, defining metrics, and shaping governance that balances control with creativity.

AI security leads should work with data security teams to ensure that sensitive and regulated data is governed, redacted where policies require it, and protected against leakage or poisoning. In turn, data security tools can be extended to enforce compliance with evolving AI governance frameworks, based on guidance that the AI governance function provides to the AI security lead.

Similarly, AI security leads can work with development leads and application security teams to integrate AI model testing and security posture management into the software development lifecycle, especially as agentic AI adoption deepens. Those relationships can then support the next step: implementing guardrails and AI firewalls that give application owners runtime protection as their applications move into production.

Likewise, AI security leads can guide cloud and infrastructure security teams in implementing new detection and response strategies for AI-driven threats.

Ultimately, AI security leads enable these teams to move from reactive defense to proactive assurance—embedding security into every layer of the AI ecosystem.

Governance as the Glue

Governance has become the mechanism that links the distributed parts of the AI ecosystem together — providing visibility, accountability, and alignment. In our previous blog, we outlined how security and governance teams can function symbiotically.

When structured effectively, governance serves as both a stabilizer and an accelerant: stabilizing the risks introduced by AI proliferation, while enabling teams to innovate within well-defined boundaries.

Security leaders increasingly find themselves not only designing controls but also designing the conversations that bring these functions into alignment — between Legal and Engineering, between Data and Infrastructure, between risk and revenue.

The Security Role in the AI Steering Committee

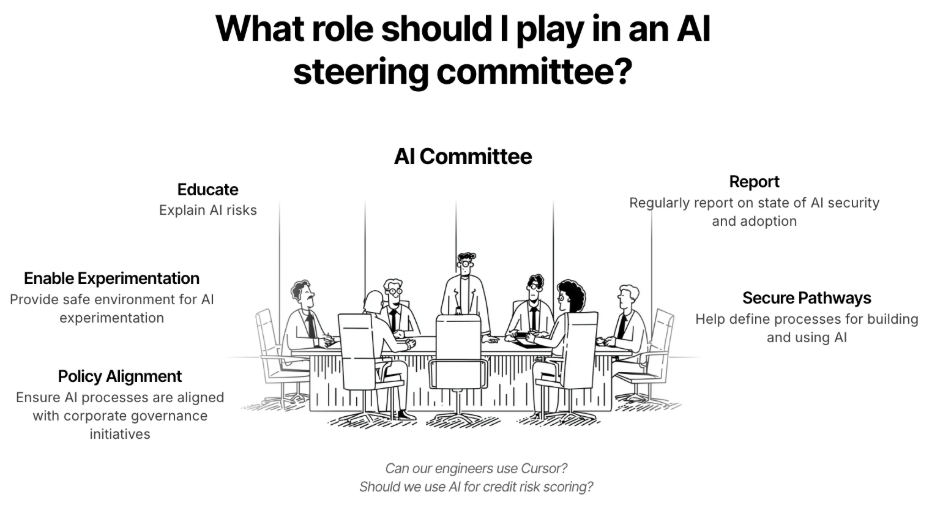

Involvement in the AI steering committee allows the security function to influence AI strategy at its inception rather than in reaction to it. The CISO’s mandate here extends beyond control—it’s about education, enablement, and oversight.

Security leaders should guide the committee in establishing safe experimentation environments, defining secure development pathways, and aligning AI projects with corporate governance and risk policies.

Regular reporting on AI adoption metrics and risk trends reinforces transparency, while clear policy alignment ensures that business units innovate within guardrails that protect data integrity and regulatory compliance. Through this approach, security becomes an essential partner in shaping trustworthy AI governance.

Communicating AI Adoption Versus Risk to Leadership and the Board

CISOs today face a complex balancing act: championing AI-driven innovation while managing an expanding spectrum of risks. Executive teams and boards are increasingly eager to harness AI’s transformative potential, yet they often lack visibility into its risk posture.

Security leaders are uniquely positioned to bridge this gap—translating technical threats into business language and measurable KPIs.

By framing AI security around data protection, compliance readiness, and operational resilience, CISOs can help boards make informed, risk-based decisions about AI investments. The most effective leaders adopt a dual narrative that quantifies both potential risk and business reward, so that security is viewed not as a blocker but as a strategic accelerator of secure AI adoption.

Conclusion: From Guardians to Enablers

The future of cybersecurity leadership lies in cross-functional symbiosis — where AI, security, and governance move in lockstep.

CISOs are uniquely positioned to drive this transformation: shifting from blocking AI to enabling it safely. The winning organizations will be those that treat AI security not as a constraint, but as the foundation for innovation, trust, and competitive advantage.