California has long served as a bellwether in tech regulation, and 2025 is no exception. With several new AI‑related laws and rules now on the books, companies operating in or serving the state must move quickly to stay on the right side of compliance. For security leaders and C‑suite executives, this presents not just a regulatory test, but also an opportunity — to embed guardrails into AI adoption, build trust, and differentiate on safety.

In this post, we’ll walk through the key changes in California’s approach to AI, explore how federal and cross‑state trends may evolve, and show how Aim Security’s capabilities map to those regulatory challenges.

The New California AI Landscape: Key Changes to Watch

1. ADS / Employment Use Regulations (Effective October 1, 2025)

California’s Civil Rights Department (CRD) issued final regulations clarifying how existing anti-discrimination law (FEHA) applies to AI and automated decision systems (ADS) in personnel decisions.

- If AI is used in screening, hiring, promotions, pay, or other employment functions, it must not result in bias or disparate impact on protected classes.

- Employers must:

• Identify all ADS systems in use

• Maintain records of decision inputs and outcomes, with a four-year retention period

• Perform bias testing and document mitigation efforts

• Update anti-discrimination policies to include ADS oversight
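One way to make the bias-testing step concrete is an adverse impact ratio check. The sketch below compares selection rates across groups and flags any group whose rate falls below 80% of the highest rate (the "four-fifths" heuristic widely used in US employment analysis). The 0.8 threshold, the group labels, and the sample data are illustrative assumptions; nothing here is a test prescribed by the CRD regulations, and a real audit would involve counsel and proper statistical review.

```python
from collections import Counter

def selection_rates(records):
    """Return {group: selected / total} for (group, was_selected) pairs."""
    totals, selected = Counter(), Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}

def adverse_impact_ratios(records):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(records)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so no disparity can be measured.
        return {g: 1.0 for g in rates}
    return {g: rate / best for g, rate in rates.items()}

# Hypothetical screening outcomes: (group label, advanced to interview?)
records = (
    [("A", True)] * 48 + [("A", False)] * 52
    + [("B", True)] * 30 + [("B", False)] * 70
)

THRESHOLD = 0.8  # assumed four-fifths heuristic, not a regulatory constant
ratios = adverse_impact_ratios(records)
flagged = sorted(g for g, r in ratios.items() if r < THRESHOLD)

print(ratios)
print("Groups needing review:", flagged)
```

A flagged group is a prompt for deeper investigation and documented mitigation, not proof of discrimination on its own.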

These rules effectively place AI systems under the same spotlight as human decision makers: accountability, transparency, and auditability will be essential.

2. The Transparency in Frontier AI Act (SB 53)

Governor Newsom signed SB 53 (Transparency in Frontier Artificial Intelligence) into law in late 2025, making California one of the first states to impose transparency obligations on large AI developers.

Key elements include:

- Requirement to publish high-level safety policies, risk assessments, and redacted protocols

- Mandatory reporting of “critical safety incidents” (e.g. misuse, unexpected behavior) within defined windows

- Whistleblower protections so that employees can raise safety concerns without fear of retaliation

Importantly, SB 53 is not aimed at small chatbots or tools; it targets “frontier” AI systems with significant scale and novel behavior.

3. AI Transparency, Watermarking, & Deepfake Laws

The 2024–2025 legislative session saw a wave of laws aimed at content origin, disclosure, and limits on harmful content:

- Requirements for watermarking AI-generated images or embedding metadata to trace AI-origin content

- Mandates that AI systems disclose when a user is interacting with an AI vs. a human (especially for conversational agents)

- Limits on deepfake or deceptive content that could mislead individuals or harm reputation

The message is clear: consumers must not be misled, and systems must carry transparency signals.

4. Legal Advisories & Existing Law Applications

Beyond new statutes, the California Attorney General issued advisories reminding businesses that existing law already imposes obligations when deploying AI — especially around privacy, fairness, and discrimination.

Plus, AI use in regulated sectors like healthcare is already subject to additional rules (e.g. data protection, algorithmic accountability).

From Compliance to Competitive Differentiation: What It Means for Security Leaders

These rules are not merely legal challenges — they signal how security, governance, and compliance must converge. For C‑suite and security professionals, a few implications stand out:

- Observability is non‑negotiable. You can’t manage what you can’t see. The new rules demand visibility into AI decision logic, training data, inputs/outputs, and incident logs.

- Policies must be enforceable, instrumented, and traceable. It’s not enough to say “don’t discriminate” — the system must enable rule definition, detection, enforcement, and audit trails.

- Incident readiness is required. With “critical safety incident” reporting now mandated, preparedness for unexpected model behavior or misuse is essential.

- Transparency strategies must scale. If you’re a large AI provider, you’ll increasingly be asked to publish safety strategies, handle whistleblower complaints, and respond to public scrutiny.

- State regulation is accelerating. California is just one high bar — other states will likely follow. A platform built for modularity and flexibility in compliance will fare better in this evolving landscape.

How Aim Security Aligns to California’s AI Regulation Challenges

Aim Security’s core mission — securing and governing every AI interaction — strongly aligns with the demands of regulatory compliance. Here are key capabilities and how they map to California’s new regime:

1. Full Visibility & Governance Across AI Use (Shadow & Intentional)

Aim detects GenAI applications, whether sanctioned or shadow, and provides governance over them.

- Why it matters: California’s ADS rules require discovery of AI systems in use (including internal tools or third-party embedded agents). Without visibility, compliance gaps emerge.

- What Aim brings: You can inventory AI tooling, connect usage to risk profiles, and monitor behavior over time.

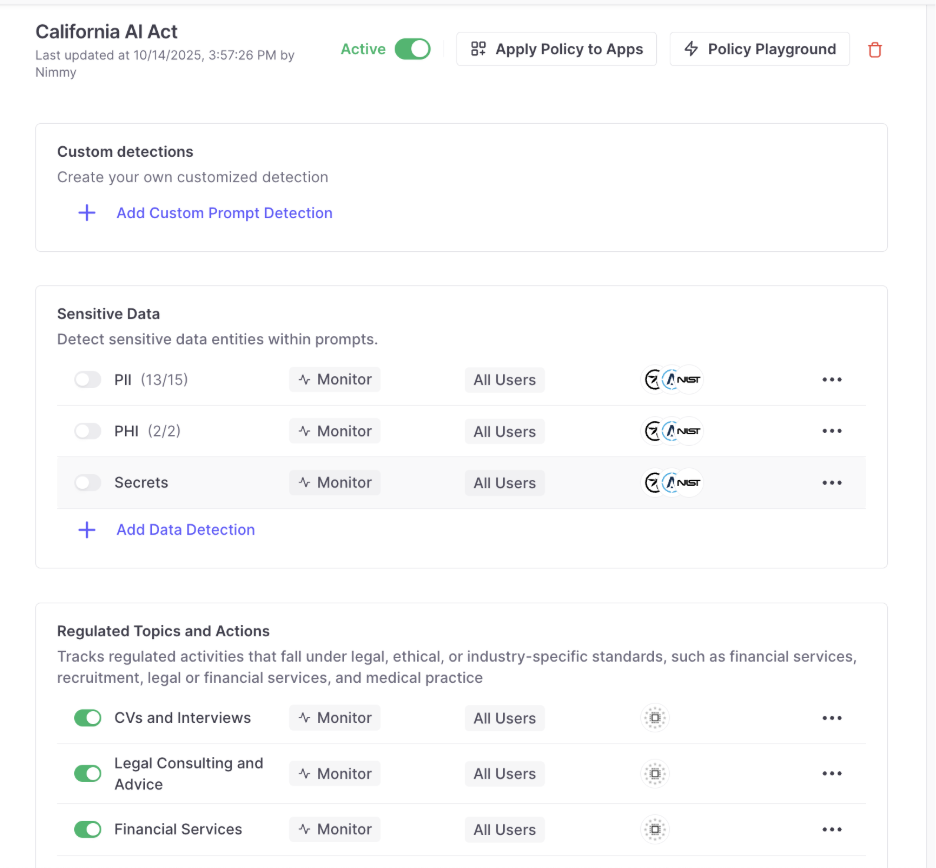

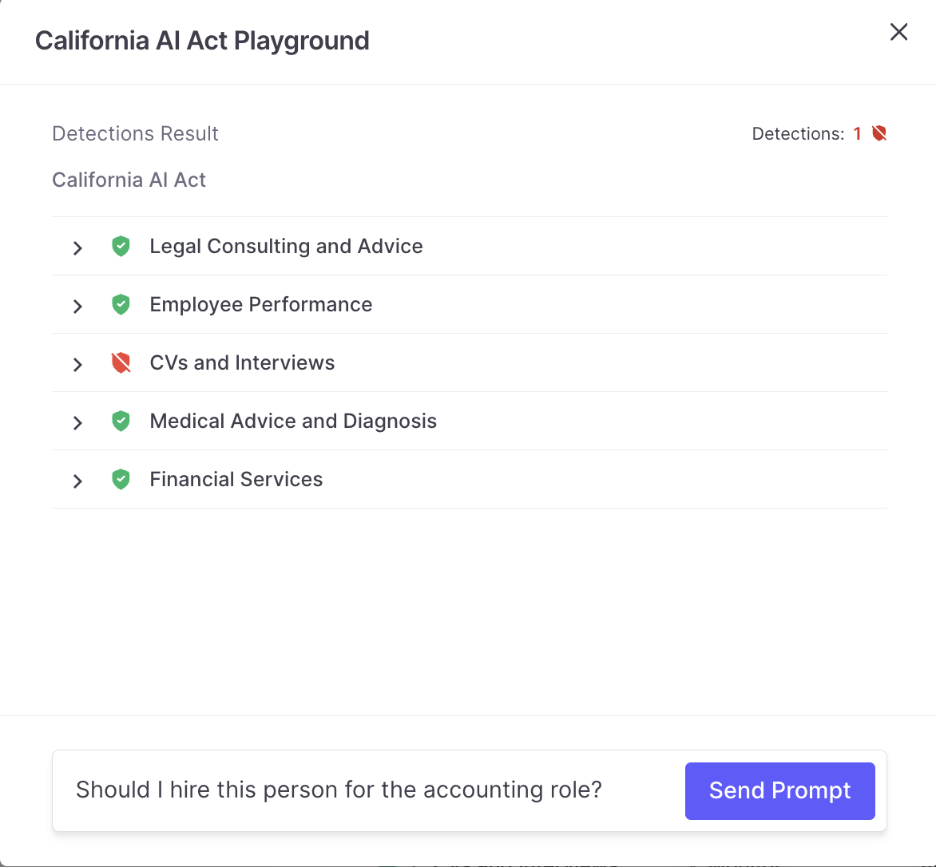

2. Policy Enforcement, Controls & Rule Automation

Aim supports enforcement of policy-defined guardrails, intercepting prompts and restricting certain inputs or outputs based on predefined or custom policies.

- Why it matters: Regulations demand systematic bias testing, input/output filtering, and enforceable control logic.

- What Aim brings: Codify rules (e.g., “no personal health data exposures,” or “flag high-risk content”) and have the platform enforce and/or flag violations automatically.
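To illustrate what "codifying a rule" can look like in general, here is a minimal sketch of a prompt guardrail that maps patterns to a block or flag action. It is a generic illustration, not Aim's API or implementation: the `Rule` class, the `evaluate` function, the rule names, and the regular expressions are all hypothetical, and production systems would typically rely on much richer detection than regex matching.

```python
import re
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    name: str
    pattern: re.Pattern
    action: str  # "block" or "flag"

# Hypothetical policies, loosely modeled on the examples in the text.
RULES = [
    Rule("ssn_like_number", re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "block"),
    Rule(
        "health_terms",
        re.compile(r"\b(diagnosis|prescription|medical record)\b", re.IGNORECASE),
        "flag",
    ),
]

def evaluate(prompt, rules=RULES):
    """Return (decision, matched rule names); "block" outranks "flag"."""
    hits = [rule for rule in rules if rule.pattern.search(prompt)]
    if any(rule.action == "block" for rule in hits):
        decision = "block"
    elif hits:
        decision = "flag"
    else:
        decision = "allow"
    return decision, [rule.name for rule in hits]

print(evaluate("My SSN is 123-45-6789"))
print(evaluate("Summarize this diagnosis for the patient"))
print(evaluate("Draft a welcome email"))
```

Keeping rules as data rather than scattered conditionals makes it easier to show an auditor which policy fired on which interaction.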

3. Audit Trails, Logging & Incident Tracking

Compliance requires record retention, traceability, and incident reporting — especially under SB 53 for “critical safety incidents.” Aim’s platform supports detailed metrics and logs of AI interactions.

- Why it matters: You must show not just that you’ve followed best practices, but that you can prove it.

- What Aim brings: Immutable logs of decisions, model calls, and policy violations form the basis of defensible audits.
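One common technique behind tamper-evident audit logs is hash chaining, where each entry stores a hash that covers both its own content and the previous entry's hash. The sketch below shows the idea under stated assumptions: it is not a description of how Aim stores logs, the function names and event fields are hypothetical, and a hash chain only makes tampering detectable. Real immutability also depends on storage controls such as write-once media or external anchoring.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(event, prev_hash):
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_entry(log, event):
    """Append an event, linking it to the entry before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry

def verify(log):
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if _digest(entry["event"], prev_hash) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True

audit_log = []
append_entry(audit_log, {"type": "model_call", "decision": "allow"})
append_entry(audit_log, {"type": "policy_violation", "decision": "block"})
print(verify(audit_log))

# Simulate tampering with a recorded decision.
audit_log[1]["event"]["decision"] = "allow"
print(verify(audit_log))
```

The second check fails because the stored hash no longer matches the altered event, which is the property an auditor cares about.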

4. Security & Risk Detection / Anomaly Monitoring

Aim builds detection mechanisms for misbehavior, anomalous inputs/outputs, prompt injections, and other threats.

- Why it matters: Even well‑intentioned AI systems can produce harmful or biased results; regulations expect monitoring and mitigation.

- What Aim brings: Alerts or automatic blocking when a model behaves unexpectedly, enabling proactive response.
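As a rough illustration of anomaly monitoring, the sketch below flags an hourly count of policy violations that deviates sharply from recent history using a z-score. This is a deliberately simple stand-in, not Aim's detection method: the `is_anomalous` function, the threshold of 3.0, and the sample counts are assumptions chosen for the example.

```python
from statistics import mean, stdev

def is_anomalous(history, current, threshold=3.0):
    """Return True if `current` is more than `threshold` standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current != mu
    return abs(current - mu) / sigma > threshold

# Hypothetical hourly counts of blocked prompts for one application.
recent_hours = [10, 12, 11, 9, 10, 11]

print(is_anomalous(recent_hours, 12))
print(is_anomalous(recent_hours, 40))
```

A spike like the second case could be wired to an alert or an automatic block, giving responders a head start on any incident that may later need to be reported.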

5. Agent/Tool‑level Oversight

Aim offers visibility and control over AI agents (calls, reasoning, tool chains) deployed in your environment.

- Why it matters: As enterprises adopt autonomous agents, their decision chains and tool usage may be scrutinized.

- What Aim brings: You can trace and control each agent step, provide guardrails, and respond to regulatory or internal queries.

6. Enterprise-ready Unified AI Security Platform

Aim integrates with enterprise AI stacks and workflows.

- Why it matters: Regulation is not a point solution — you need consistency across tools, models, and business flows.

- What Aim brings: A single pane of glass for visibility, governance, and security across third-party, homegrown, and agentic AI.

Call to Action for Security Leaders & C‑Suite

For those operating in or serving California:

- Audit your AI estate. Identify every AI or automated system (even small internal tools) in use.

- Define your compliance policies. Tie your rules to legal requirements (e.g. no bias, data protection, transparency).

- Select an AI security governance platform. Choose one that handles visibility, enforcement, logging, and anomaly detection.

- Test models and incident workflows. Simulate edge cases; define response procedures.

- Document everything. Logs, audits, decision paths, and governance changes must be retrievable.

- Plan for expansion. Adopt scalable, modular compliance practices.

At Aim, we believe that strong AI security is not an obstacle; it’s a foundation. When regulation demands transparency, accountability, and safety, organizations that have embedded governance from the start can move faster, minimize risk, and build stronger trust with customers, shareholders, and regulators.