Overview

AI has long since moved beyond experimentation into nearly every business function, creating a need to address two connected areas: AI security and AI governance. Both aim to reduce risk and ensure responsible use, but they target different stages of the AI lifecycle and different parts of the AI landscape.

Both require cross-functional collaboration across engineering, product, data, governance, and security teams. Governance translates regulations into controls, while security provides feedback on how well those controls work.

Yet, challenges persist. Too often, governance and security operate separately, duplicating work or working at cross-purposes, leading to fragmented risk management and oversight.

This post explores where governance and security overlap, where they differ, and how investment in one can strengthen the other.

AI Security: Protecting Data and Access

AI security focuses on protecting systems, data, and usage against unauthorized access and misuse. Its main goals are:

- Stopping AI attacks, whether they come through user actions, rogue AI agents, or attacker exploits, and identifying vulnerabilities in the AI stack before deployment, to prevent unauthorized access to models, datasets, workflows, systems, and infrastructure.

- Protecting sensitive data from exposure during training or inference, whether through ordinary user prompts or through prompt injection and jailbreak attacks.

- Preventing sharing of sensitive, proprietary or regulated data, particularly when AI is integrated into workflows across cloud platforms and third-party tools.

Security ensures that AI systems are technically resilient and do not become a vulnerability that attackers, or careless or malicious users, can exploit. Think of security as the “lock and alarm system” in your AI house. Without it, even the best-governed AI strategy crumbles.

AI Governance: Setting the Rules and Ensuring Accountability

AI governance provides the policies, frameworks, and oversight mechanisms that guide how AI should be developed and used. Its scope includes:

- Complying with regulations and frameworks such as the EU AI Act, NIST AI RMF, and OWASP Top 10 for LLM Applications, as well as industry-specific rules and state-specific legislation.

- Ensuring human oversight, so AI decisions do not become black-box judgments.

- Promoting ethical usage, including fairness, transparency, and non-discrimination.

- Aligning AI with organizational values and strategy so it becomes a driver of long-term business success rather than a source of unmanaged risk.

Whereas security is more about technology, governance is more about policies and accountability. Governance is the “rules of the road.” It tells us not just how to drive AI safely, but also where we’re allowed to go. Its importance lies in three areas:

- Risk mitigation - Poor governance exposes companies to legal liabilities, regulatory penalties, ethical lapses, and reputational harm. As AI regulations tighten globally, strong governance ensures compliance, reduces exposure to penalties, and demonstrates adherence to fundamental standards increasingly expected of AI-adopting organizations.

- Safe innovation - Governance frameworks allow companies to adopt AI quickly without fear of unethical or inappropriate use.

- Strategic alignment - By tying AI use to company values and goals, governance prevents fragmented adoption and ensures AI delivers measurable organizational value.

Without governance, AI may deliver short-term productivity boosts but create long-term chaos, as well as accumulating technical and business risk debt.

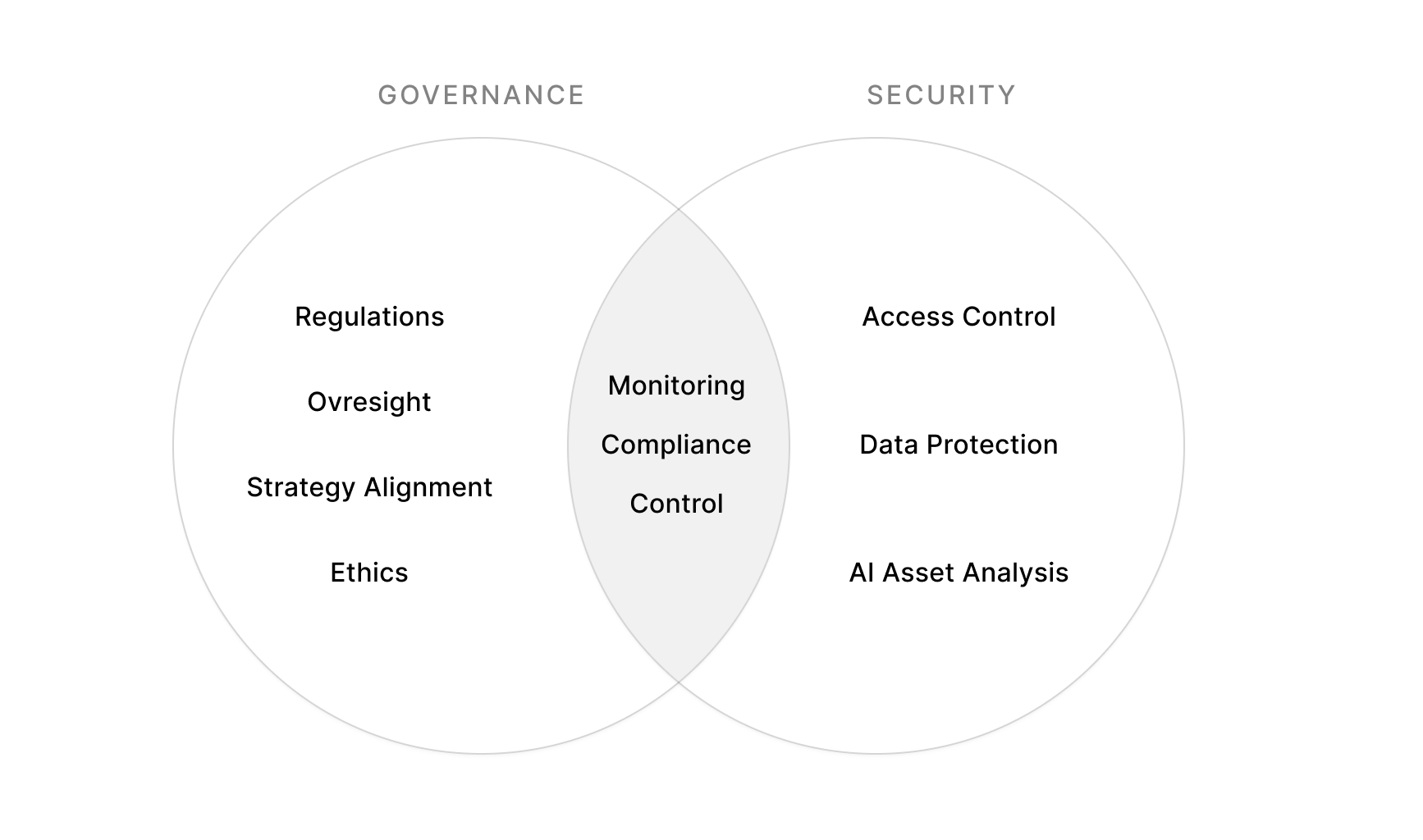

Where Security and Governance Overlap

Despite their differences, security and governance often reinforce each other:

- Prompt protections that prevent sensitive data from being shared not only strengthen security but also enforce compliance with data privacy regulations.

- Agent security controls that prevent excessive access reduce the risk of agent overexposure while helping ensure AI systems operate in a fair and auditable way.

- Model scanning tools that document how models are trained and deployed improve governance transparency and help map the security gaps where action is required.
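As a rough illustration of the first point, the sketch below redacts sensitive values from a prompt before it leaves the organization and counts what was removed, so the same control that protects the data also produces evidence for privacy compliance. The patterns, the `redact_prompt` function, and the `RedactionResult` type are assumptions made for this example rather than any product's API, and two regular expressions fall far short of real PII coverage.

```python
import re
from dataclasses import dataclass, field

# Hypothetical, deliberately simple patterns for illustration only.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


@dataclass
class RedactionResult:
    text: str                                     # prompt that is safe to forward
    findings: dict = field(default_factory=dict)  # matches per PII type, for the audit trail


def redact_prompt(prompt: str) -> RedactionResult:
    """Replace each PII match with a placeholder and count the matches."""
    findings = {}
    for label, pattern in PII_PATTERNS.items():
        placeholder = f"[{label.upper()}_REDACTED]"
        prompt, count = pattern.subn(placeholder, prompt)
        if count:
            findings[label] = count
    return RedactionResult(text=prompt, findings=findings)


result = redact_prompt("Email jane.doe@example.com about SSN 123-45-6789")
print(result.text)      # redacted prompt for the third-party AI tool
print(result.findings)  # record that governance can review
```

The security team cares about `result.text`; the governance team cares about `result.findings`, which can be logged without storing the sensitive values themselves.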

In short: governance defines the “what and why”, while security implements the “how” in practice.

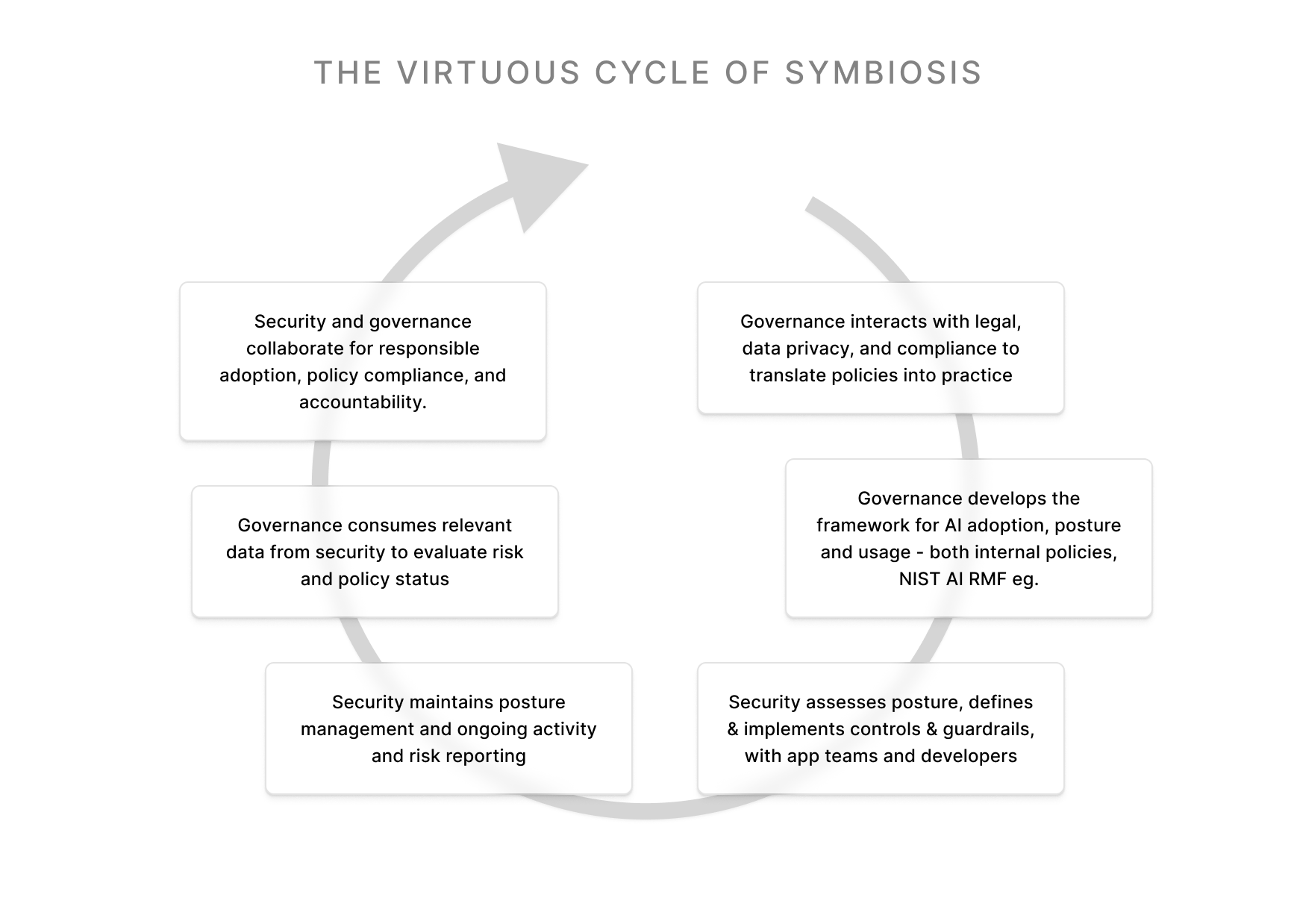

How Governance and Security Interact

AI security products generate valuable data that complements AI governance by providing the reliable evidence needed to guide compliance, ethics, and accountability. The interaction between governance and security can be understood as part of an AI risk management system:

- Define and refine guidelines

Regulations and compliance standards define what “safe” AI means, establishing clear boundaries between what is permitted and prohibited, whether through approving third-party AI systems for organizational use or by providing structured best-practice guidelines for developing homegrown AI.

- Monitor and manage risk thresholds

Security controls enforce these requirements by restricting data exposure, access, and misuse, whether through controlled agent access or prompt-level contextual defenses. These controls are logged as security telemetry and fed into risk management systems. This telemetry not only enforces policies but also produces risk scores that governance teams can use to prioritize oversight and refine acceptable use thresholds.

- Data-driven accountability

When anomalies or attacks are detected, security products provide forensic evidence, such as logs of adversarial prompts, unauthorized data access, or model manipulation attempts. Governance then uses this evidence to drive incident response, root-cause analysis, and regulatory reporting, ensuring accountability across the organization.

- Common risk context

Employee training builds awareness of both ethical and technical risks, while cross-functional communication, supported by dashboards that translate security telemetry into governance-relevant insights, helps business leaders and technical teams share a common risk language.

- Coverage of the AI lifecycle

Continuous monitoring ensures that both AI models and third-party systems are used responsibly and in compliance with organizational AI governance. Data from testing and validation phases also informs early lifecycle decisions, including vendor selection, go/no-go approvals, and retraining requirements. This ensures governance and security are integrated from design to deployment.

- Limit oversight blind spots

Transparency and integrity across the lifecycle make both governance and security credible, while alignment with regulatory audits ensures organizations can demonstrate compliance with frameworks such as the EU AI Act and NIST AI RMF.

This creates a closed feedback loop: governance provides the framework and policies, security provides the enforcement and the relevant data for governance, and together they ensure responsible adoption and policy compliance.
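One way to picture this loop is as a small aggregation step: security events go in, per-system risk scores come out, and governance compares them against a threshold it owns. The sketch below is a minimal illustration under stated assumptions. The event types, the weights in `SEVERITY_WEIGHTS`, and the function names are all hypothetical, and a real risk model would be considerably richer than a weighted sum.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical severity weights; in practice governance would define and tune these.
SEVERITY_WEIGHTS = {
    "prompt_injection_blocked": 5,
    "agent_permission_denied": 3,
    "sensitive_data_redacted": 2,
}


@dataclass(frozen=True)
class SecurityEvent:
    system: str      # AI system or tool that produced the event
    event_type: str  # key into SEVERITY_WEIGHTS


def risk_scores(events):
    """Sum severity weights per system; unknown event types count as 1."""
    scores = defaultdict(int)
    for event in events:
        scores[event.system] += SEVERITY_WEIGHTS.get(event.event_type, 1)
    return dict(scores)


def systems_needing_review(events, threshold):
    """Return the systems whose score meets or exceeds the governance threshold."""
    return sorted(s for s, score in risk_scores(events).items() if score >= threshold)


events = [
    SecurityEvent("chatbot", "prompt_injection_blocked"),
    SecurityEvent("chatbot", "sensitive_data_redacted"),
    SecurityEvent("summarizer", "sensitive_data_redacted"),
]
print(risk_scores(events))                # per-system scores
print(systems_needing_review(events, 5))  # systems flagged for oversight
```

Security owns the events, governance owns the weights and the threshold, and changing either one is how the loop gets refined over time.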

What does this look like in practice?

- Protect Sensitive Data → Strengthen Governance Oversight

  - Security: Apply controls to prevent user PII from being sent to third-party AI tools, and enforce prompt-level obfuscation and redaction.

  - Governance: These protections help ensure that AI system design, development, and deployment align with, for example, the NIST AI RMF ‘data privacy’ principles. The result is better auditability and clearer accountability for privacy compliance.

- Map AI Assets → Identify and Govern Risks

  - Security: Build an AI asset inventory to track models, datasets, and workflows across the organization, while monitoring dependencies and model integrity.

  - Governance: This visibility supports, for example, the EU AI Act requirement to identify high-risk activities, conduct impact assessments, and define risk-based policies. It also reflects OWASP Top 10 for LLM Applications best practices by validating data origins and model trustworthiness.

- Restrict and Govern AI Agent Actions → Ensure Ethical and Secure Operations

  - Security: Limit agent permissions, such as file access, code execution, and external API calls, to reduce attack surfaces. Continuously scan for vulnerabilities, backdoors, or malicious behaviors introduced through supply chain compromise.

  - Governance: Define clear policies for what agents can and cannot do, ensuring their actions remain aligned with organizational ethics and compliance obligations. This supports, for example, OWASP’s guidance on model poisoning (preventing compromised agents), and echoes the EU AI Act and NIST AI RMF guidelines around operational risk management and ethical oversight.
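To make the agent example concrete, here is a minimal sketch of a deny-by-default permission check: governance writes the policy as data, security enforces it at call time, and every decision is recorded for audit. The agent names, action names, and the `PolicyEnforcer` class are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass, field

# Hypothetical policy written by governance: which actions each agent may take.
AGENT_POLICIES = {
    "support-agent": frozenset({"read_file", "call_external_api"}),
    "reporting-agent": frozenset({"read_file"}),
}


@dataclass
class PolicyEnforcer:
    policies: dict
    audit_log: list = field(default_factory=list)

    def authorize(self, agent: str, action: str) -> bool:
        """Allow only actions explicitly listed for the agent; log every decision."""
        allowed = action in self.policies.get(agent, frozenset())
        self.audit_log.append({"agent": agent, "action": action, "allowed": allowed})
        return allowed


enforcer = PolicyEnforcer(AGENT_POLICIES)
print(enforcer.authorize("support-agent", "read_file"))       # listed action
print(enforcer.authorize("reporting-agent", "execute_code"))  # unlisted action
print(enforcer.authorize("unknown-agent", "read_file"))       # agent with no policy
print(enforcer.audit_log)
```

Because unknown agents and unlisted actions are denied, a gap in the policy fails safe, and the audit log gives governance a record of what agents attempted rather than only what they were allowed to do.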

Final Thoughts

AI security and AI governance reinforce each other. Security provides the guardrails, while governance sets the direction. Together, they create trust in AI use, ensure compliance, and enable safe innovation.

If you’re an executive, the key question isn’t “Do we have AI security?” or “Do we have AI governance?” It’s: “Do these two functions talk to each other every single week?”

Organizations that answer “yes” are the ones that will not only protect themselves from risk but also unlock AI as a true driver of long-term strategic value.