AI Red Teaming

Robust Red Teaming for Your Entire AI Stack

Aim Red Teaming provides comprehensive, dynamic testing against real-world attacks for your AI apps, tools, and agents. It helps you identify vulnerabilities and risks before adversaries exploit them, so your AI-powered systems remain secure, resilient, and compliant.

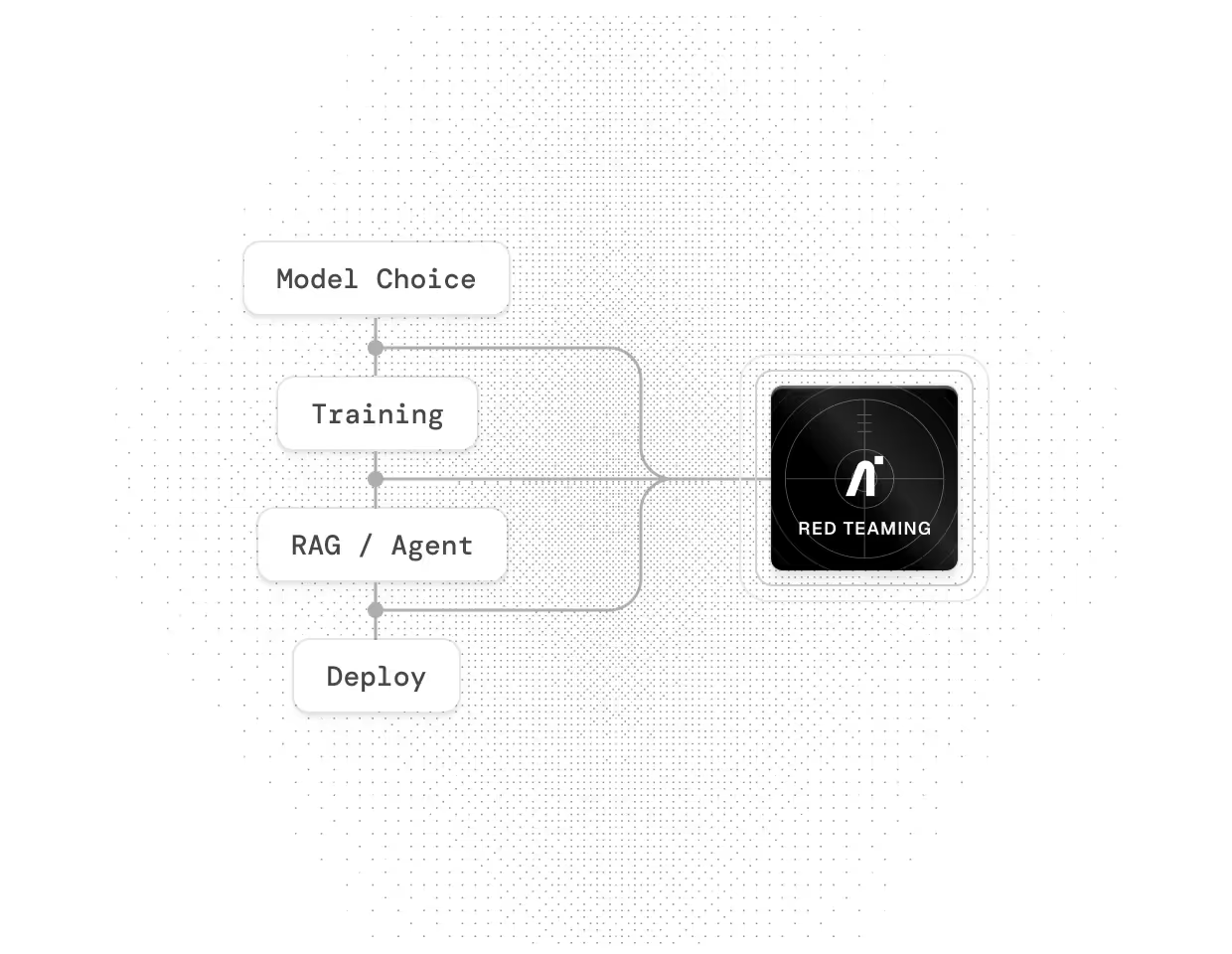

Red team every AI building block

AI systems are only as strong as their weakest component. Whether you’re evaluating a base model for jailbreak risk, validating an application’s RAG pipeline for data leakage, or stress-testing an agent’s decision-making logic, Aim ensures every layer is resilient.

Flexibility that aligns with your workflows

Aim Red Teaming is designed to meet you where you are. Run tests as part of your CI/CD pipeline, or launch targeted assessments on demand when onboarding a new model or agent. Choose the interface that works best for your team (CLI, API, or web application), and deploy in the cloud or fully on-prem for sensitive, high-risk applications and data.

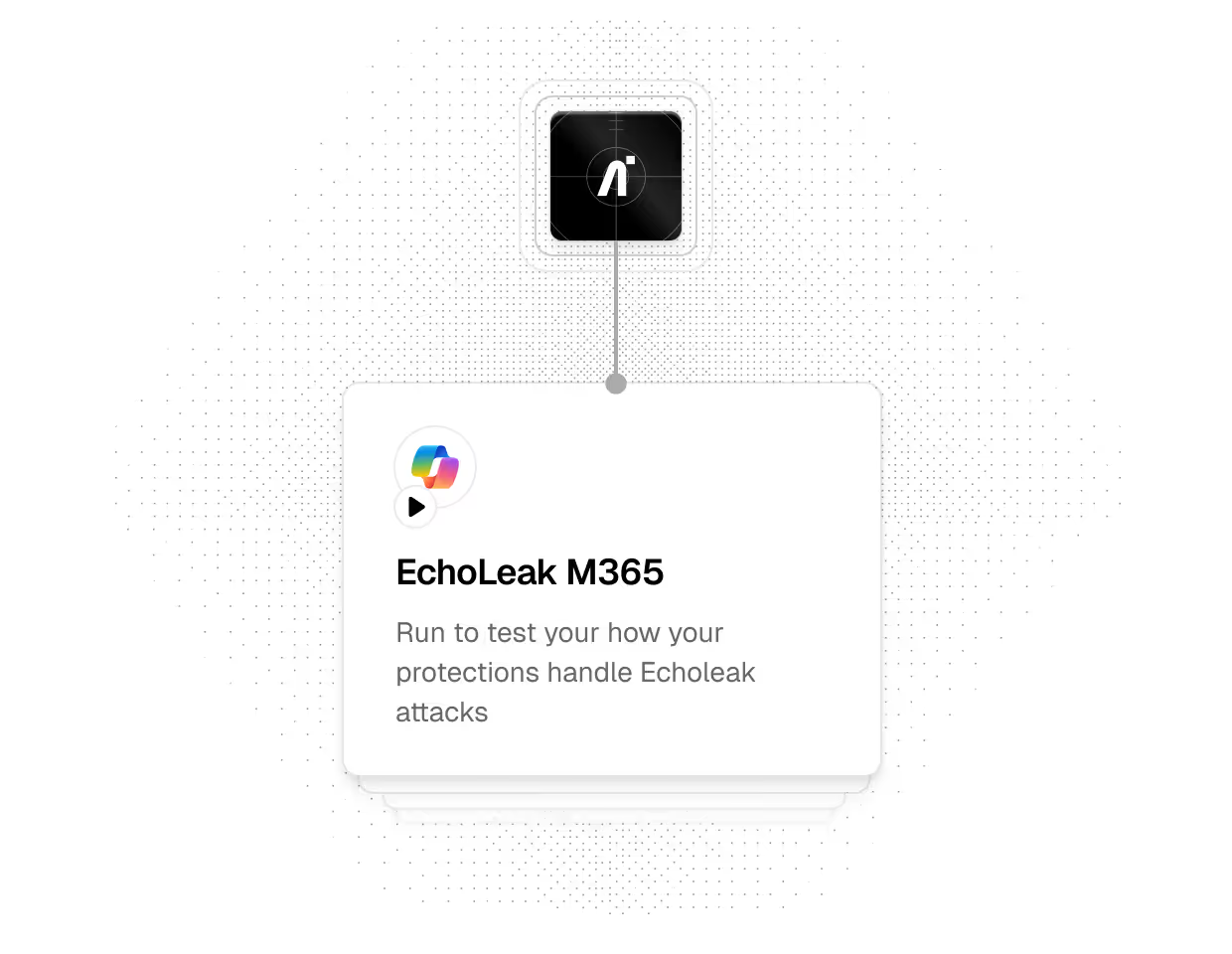

Comprehensive Attack Library

From multi-turn manipulation to image-based prompt injections, Aim’s rich library of public and proprietary detections and attack profiles helps you stay ahead of the latest and most sophisticated threats targeting AI systems. Our red teaming draws on both public datasets and proprietary, cutting-edge, zero-day exploits developed by Aim Labs.

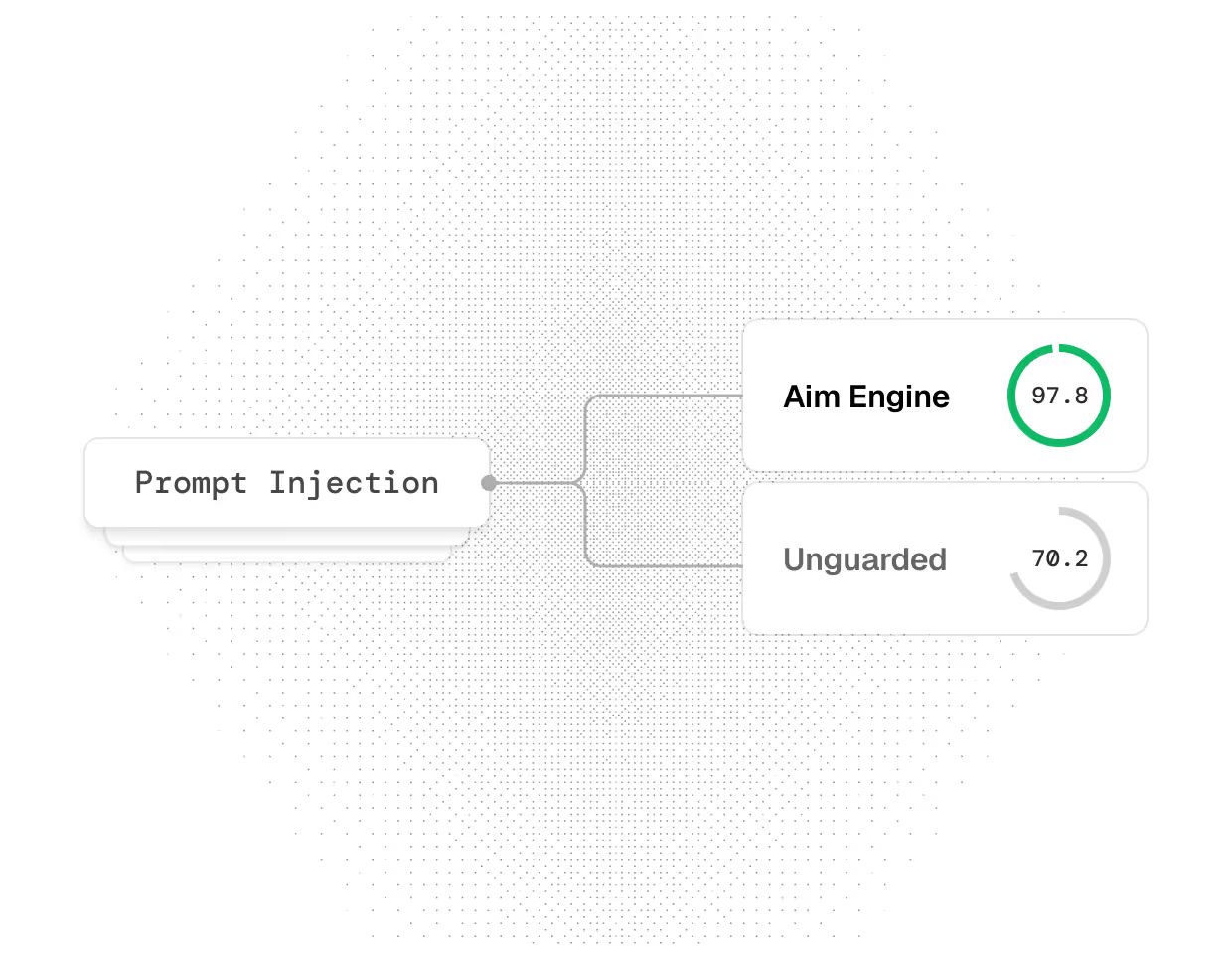

Validate and strengthen your defenses

Red teaming doesn’t stop at identifying vulnerabilities and risks; it also validates your policies and guardrails in real time. By running controlled attacks against applications protected by Aim’s runtime guardrails, you can measure effectiveness, fine-tune detection policies, and continuously improve resilience.

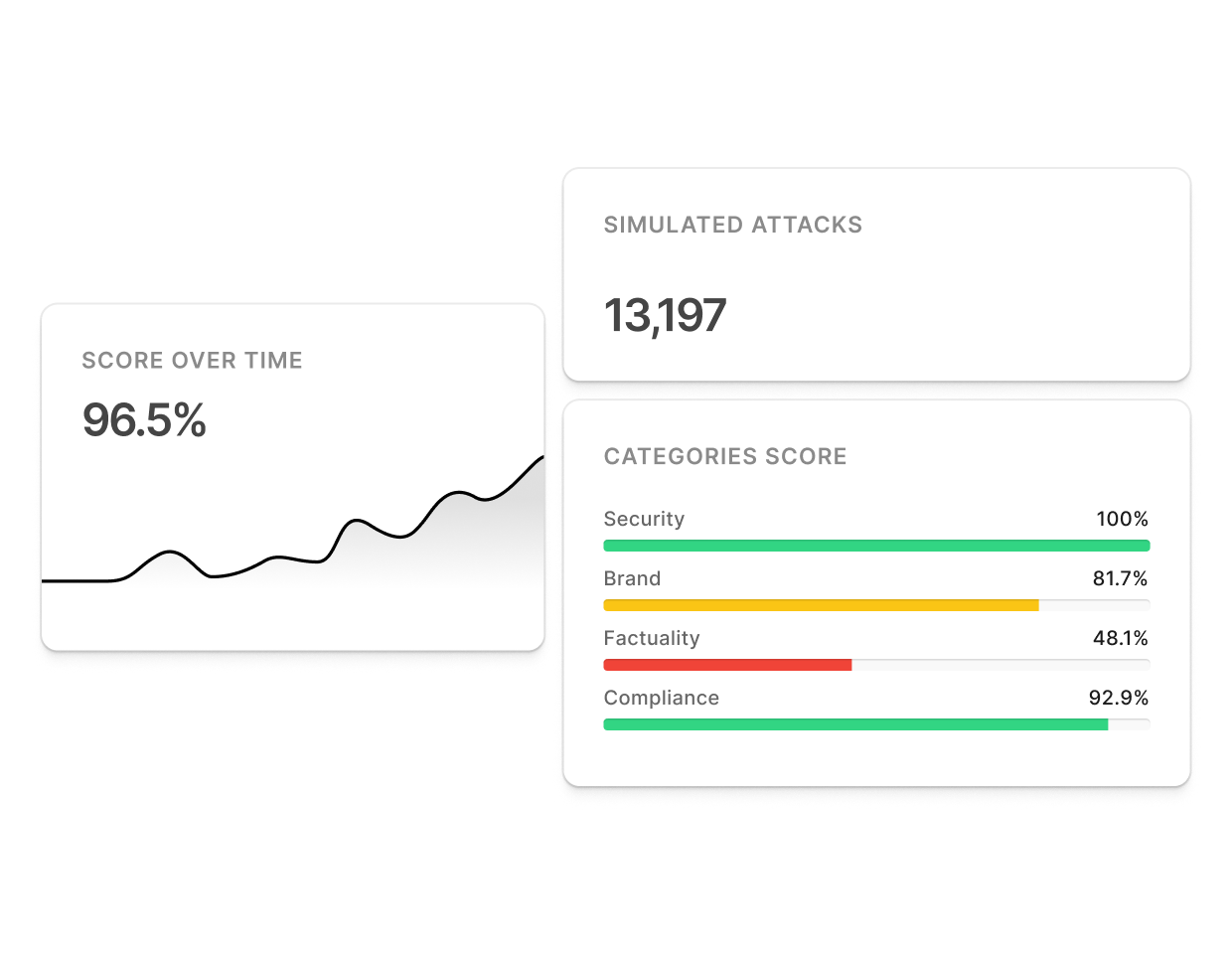

Actionable insights for security teams

Rich dashboards and reports track red team output and findings, measure pass/fail results, and highlight the impact of vulnerabilities, so security teams can prioritize remediation, enforce governance, and prove compliance across business-critical AI deployments.

World-Class AI Security Experts

Aim is listed as a leading AI security platform in the 2024 Gartner Innovation Guide for Generative AI and in Gartner’s 2025 AI security research.

Aim participated in the 2024 World Economic Forum for its work advancing AI security in leading global enterprises.